The HAL repository system has adopted SWORD v2 for remote imports:

Category: SWORD v2

SWORDv2 and DSpace: New Release

Re-posted from: http://cottagelabs.com/news/swordv2-and-dspace-new-release

We’re pleased to say that over the last month we’ve been working hard to get the new version of the SWORDv2 Java library, and the related DSpace module which uses it, up to release quality in time for the DSpace 4.0 release, which is coming very soon.

For DSpace, this means we’ve achieved the following:

- Some enhancements to the authentication/authorisation process, and more configurability

- Proper support for the standard DSpace METS package, which had been omitted in the first version of SWORDv2 for various complicated reasons

- Lots more configuration options, giving administrators the ability to fine tune their SWORDv2 endpoint

- Improvements to how metadata is created, added and replaced and how those changes affect items in different parts of the workflow

- Some general bug fixing and refactoring for better code

If you’re not a DSpace user, though, you can still benefit from the common Java library. We’ve now released it properly through the Maven Central repository, and if you want to build your server environment from it, you can include it in your project as easily as:

    <dependency>
      <groupId>org.swordapp</groupId>
      <artifactId>sword2-server</artifactId>
      <version>1.0</version>
      <type>jar</type>
      <classifier>classes</classifier>
    </dependency>

    <dependency>
      <groupId>org.swordapp</groupId>
      <artifactId>sword2-server</artifactId>
      <version>1.0</version>
      <type>war</type>
    </dependency>

We hope you have fun with the new software. In the meantime, don’t forget that there’s lots of SWORD expertise hanging out on the sword-app-tech mailing list.

Richard Jones, 15th November 2013

SWORDv2 Compliance: how to achieve it?

Our previous post “SWORDv2 Compliance: what is it and why is it good?” introduced some reasons to make your scholarly systems compliant with SWORDv2, and what that really means. This post covers an approach to achieving SWORDv2 compliance for your particular use case(s), using DataStage and DataBank as examples.

First, if you are implementing a SWORD v2 solution, it’s worth having a passing acquaintance with the specification, at least so you are aware of the standard protocol operations.

An approach that we’ve found works well for designing how to fit SWORD v2 to your workflow is as follows:

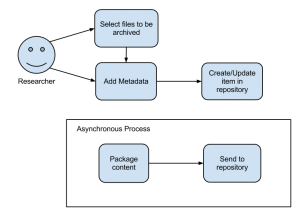

1. Diagram your deposit workflow

Draw a diagram of your deposit workflow, with all the systems and interactions required. Don’t mention SWORD v2 anywhere at this point; let’s make sure that it meets your workflow requirements, not that you fit your workflow to it.

For example, here’s a basic diagram showing how the DataStage to DataBank deposit looks:

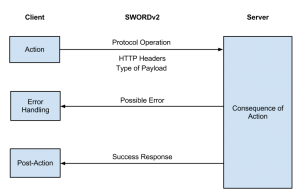

2. Re-draw the diagram around SWORDv2

Re-draw the diagram in the following form:

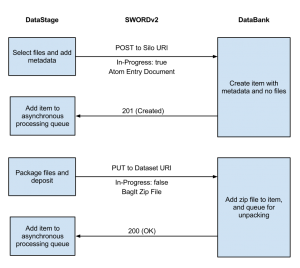

By referring to the spec throughout, you can work out which SWORD v2 operations, with which HTTP headers and what deposit content, are required to achieve your workflow. For example, the diagram for DataFlow, which integrates DataStage and DataBank, looks basically like this:

3. Utilise the pre-existing code libraries

Take one of the code libraries (which exist in Python, Ruby, Java and PHP for the client-side, and Java and Python for the server-side), and find the methods which implement the SWORD operations that your diagram tells you that you need.

For example, consider some (simplified) Python code required by DataStage to deposit to DataBank:

    # names such as service_document_url and dataset_title are assumed to be
    # defined elsewhere by the application
    from sword2 import Connection, Entry

    # create a Connection object
    conn = Connection(service_document_url)

    # obtain a silo to deposit to (this just gets the first Silo in the list)
    conn.get_service_document()
    silo = conn.sd.workspaces[0][1][0]

    # construct an Atom Entry document containing the metadata
    e = Entry(id=dataset_identifier, title=dataset_title,
              dcterms_abstract=dataset_description)

    # issue a create request
    receipt = conn.create(col_iri=silo.href, metadata_entry=e)

It should then be a relatively straightforward task for a programmer to integrate this code into your application workflow.

(This is the second part of a two-part article about SWORDv2 compliance. You can read the first part here: SWORDv2 Compliance: what is it and why is it good?)

What does it mean to be compliant, and why is this a good thing? Where are the edges of SWORD v2 (what is part of the standard, what isn’t)? This blog post aims to address these questions.

What does it mean to be compliant, and why is this a good thing?

A compelling reason to comply with SWORD v2 in your environment is that more and more sector-wide infrastructure is already being built using it, and you will be able to take advantage of it easily. SWORD v2 allows content to easily be moved between scholarly systems. For example, Jisc, via the UKRepNet+ project, are working with EuroPMC and Nature Publishing Group to allow the deposit from their publisher systems directly into digital repositories. It is likely that more cross-organisational bodies will utilise SWORD v2 for parts of their infrastructure in the future, and it will be valuable for repositories (and other systems) to be prepared.

From a technical point of view, compliance just means having implemented the parts of SWORD v2 which are required by the specification. It has a lot of optional components, so it is relatively easy for a software implementation to be compliant.

You can comply with the specification while also extending it for your own needs. Since SWORD v2 is a profile of AtomPub, it can take advantage of all of the extension mechanisms that AtomPub provides. This means, for example, that custom metadata (beyond the Dublin Core explicit in the specification) can be embedded into deposit requests.
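As a rough illustration of embedding custom metadata, the sketch below builds an Atom Entry that carries a Dublin Core term alongside one element from a local namespace. It uses only the Python standard library; the `LOCAL_NS` namespace, the `instrument` element and the `build_entry` helper are hypothetical names chosen for this example and are not part of SWORD v2.

```python
import xml.etree.ElementTree as ET

ATOM_NS = "http://www.w3.org/2005/Atom"
DCTERMS_NS = "http://purl.org/dc/terms/"
# Hypothetical namespace for local metadata; not defined by SWORD v2.
LOCAL_NS = "http://example.org/ns/lab-metadata"


def build_entry(title, abstract, instrument):
    """Return an Atom Entry (as text) with Dublin Core and custom metadata."""
    entry = ET.Element(f"{{{ATOM_NS}}}entry")
    ET.SubElement(entry, f"{{{ATOM_NS}}}title").text = title
    # Dublin Core term, the baseline metadata format named in the post.
    ET.SubElement(entry, f"{{{DCTERMS_NS}}}abstract").text = abstract
    # Extension element in a foreign namespace, as AtomPub permits.
    ET.SubElement(entry, f"{{{LOCAL_NS}}}instrument").text = instrument
    return ET.tostring(entry, encoding="unicode")


if __name__ == "__main__":
    print(build_entry("Run 42", "Diffraction images", "Beamline MX1"))
```

A server that does not understand the extra element can ignore it, while a server that shares the namespace can make use of it.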

A more general benefit to being compliant is that any given client/server pair can understand each other. This doesn’t strictly mean that one will be able to deposit to the other, since a successful deposit implies a common understanding of deposit package formats and metadata, which are not issues that SWORD v2 is designed to deal with. What it means instead is that they can mutually determine whether deposit can take place, and carry it out if so.

Another benefit to complying with SWORD v2 is for reduction of overall effort in supporting deposit. Even though there may be work to do in your client or server environment to support the package and metadata formats for your needs, the transport layer itself is well defined and well supported by software libraries and repository systems. Thus the overall implementation effort for a deposit workflow is significantly reduced.

Where are the edges of SWORD v2?

There are three key principles to bear in mind regarding what SWORD v2 is:

- SWORD v2 is about TRANSPORT – it is for getting content from one place to another, but is agnostic to what that content is (it does not care what the package format or metadata format is)

- SWORD v2 is about ACTIONS – it defines operations that you can do to content on a server (e.g. create, retrieve, update, delete), which can be mapped on to deposit use cases

- SWORD v2 is a SPECIFICATION – it defines ways to carry out deposit actions over HTTP using the semantics of AtomPub, and provides some extensions. It is not, in any way, a piece of software, although there are software implementations of SWORD v2 just as there are software implementations of AtomPub.
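To make the ACTIONS principle concrete, here is a small sketch that maps some common operations to an HTTP method and the kind of IRI it targets. The table reflects our reading of the SWORD v2 profile and should be checked against the specification; the `operations_for` helper and the operation labels are names invented for this example.

```python
# Operation -> (HTTP method, target IRI type), per our reading of the
# SWORD v2 profile. The labels on the left are informal, not spec terms.
SWORD_OPERATIONS = {
    "retrieve service document": ("GET", "SD-IRI"),
    "create resource": ("POST", "Col-IRI"),
    "retrieve content": ("GET", "EM-IRI"),
    "replace content": ("PUT", "EM-IRI"),
    "replace metadata": ("PUT", "Edit-IRI"),
    "delete content": ("DELETE", "EM-IRI"),
    "delete container": ("DELETE", "Edit-IRI"),
    "retrieve statement": ("GET", "State-IRI"),
}


def operations_for(use_case_steps):
    """Translate a list of workflow steps into (step, method, IRI type)."""
    return [(step, *SWORD_OPERATIONS[step]) for step in use_case_steps]


if __name__ == "__main__":
    for row in operations_for(["create resource", "replace metadata"]):
        print(row)
```

Writing a deposit workflow down as a list of such steps is one way to check that every step has a corresponding protocol operation.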

There are some things that SWORD v2 does, though, to help out with some of the content related issues. First, it allows you to give your package formats identifiers, which means that a client and server can agree when they support the same format, but the definition of those identifiers is not part of the standard – you can just make them up yourself!

For example, the BagIt format used by DataStage and DataBank is:

http://dataflow.ox.ac.uk/package/DataBankBagIt

This allows both sides of the deposit to know that they are dealing with a BagIt file of the particular format that they both understand.

Second, it actually does specify a minimum metadata format (Dublin Core) and package format (a simple ZIP file) so that any client/server pair should always be able to transfer binary content, even if they can’t exchange more structured packages or metadata.
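The agreement on package formats can be sketched as a simple comparison of identifiers. The example below assumes the client already holds the list of formats the server advertises; `choose_packaging` is a hypothetical helper, and the fallback to the simple ZIP identifier is one possible policy rather than something the specification mandates.

```python
# Identifier for the simple ZIP package format from the SWORD v2 profile.
SIMPLE_ZIP = "http://purl.org/net/sword/package/SimpleZip"
# Identifier used by DataStage and DataBank, as quoted above.
DATABANK_BAGIT = "http://dataflow.ox.ac.uk/package/DataBankBagIt"


def choose_packaging(client_formats, server_formats):
    """Pick the first client-preferred format that the server also accepts.

    Falls back to the simple ZIP format if the server accepts it, and
    returns None when there is no common ground at all.
    """
    accepted = set(server_formats)
    for fmt in client_formats:
        if fmt in accepted:
            return fmt
    return SIMPLE_ZIP if SIMPLE_ZIP in accepted else None


if __name__ == "__main__":
    print(choose_packaging([DATABANK_BAGIT], [SIMPLE_ZIP, DATABANK_BAGIT]))
```

Because the identifiers are opaque strings, the comparison is exact: two parties share a format only if they use the same identifier for it.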

What to do if you’re having trouble?

Because SWORD v2 is a specification rather than a piece of software, there are a variety of software implementations in different languages for different purposes, and they all have some variations in how they implement the spec. This means, especially as much of that software is new, that sometimes things won’t go as expected. In those cases there are some things that you can do:

- Come to sword-app-tech, the general SWORD technical discussion forum. Many implementers from different communities hang out there, and general issues with SWORD may get answered.

- Go to your community discussion forum. For example, DSpace has its own implementations of SWORD v1 and SWORD v2, and the developers there might be better placed to help you than on sword-app-tech.

- Check out the SWORD v2 and AtomPub specs – they will tell you what you can do with the standard, and the common code libraries should be sufficiently general to allow you to extend your use of SWORD v2.

(This is the first part of a two-part article about SWORDv2 compliance. You can read the second part here: SWORDv2 Compliance: how to achieve it)

Cottage Labs have written a blog post about their project to add SWORD v2 deposit functionality into the DCC’s DMPOnline tool. This new feature allows data management plans that have been created to then be deposited into a central repository using SWORD v2. By using SWORD v2 (rather than SWORD v1), the data management plans can be re-deposited and updated within the repository as they change.

The code for DMPOnline with Repository Integration is available from https://github.com/CottageLabs/DMPOnline and the Cottage Labs project page is at http://cottagelabs.com/projects/oxforddmponline

Data Deposit Scenarios

This is a blog post detailing some work that the SWORD team (Richard Jones, Stuart Lewis, Pablo de Castro Martin) have undertaken on research data deposit scenarios. It is hoped that a fuller write-up of this work will be published formally. In addition, a cut-down version will be presented at the Open Repositories 2012 conference as a Pecha Kucha session.

Research Data Management (RDM) initiatives are a hot topic, driven by factors such as funder and institutional policies, the increasing complexity of dealing with everyday research data, the expectation of being able to perform and share science using collaborative tools such as those available in social and cloud computing, and the growing popularity of open scholarship. However, although some infrastructures for data management are gradually becoming commonplace, many initiatives remain at a rather embryonic stage.

This post summarises, from a technical point of view, the wide variety of research data transfer processes presented in full in our associated paper, with a focus on how a deposit protocol such as SWORD may aid those transfer procedures. It covers a categorisation and description of the data deposit fingerprint concept, specific use cases, the technical challenges of implementing these use cases using SWORD v2, and finally the gaps in the protocol’s abilities.

Use Case Components

Each data deposit use case is made up of a number of elements:

- The data type: digital data in the form of files, metadata, etc.

  - Data content (DC): We define data as discrete files, which are either deposited on their own, or as a part of a collection of files that make up a given set of data. Such files can vary in size.

  - Data description (DD): Data files often have metadata. These describe aspects of the data file, such as where it came from, who created it, when it was made, how large it is, along with other descriptive, administrative, or security elements.

  - Data collection description (DCD): A data collection description is a record of the metadata that describes a data collection (rather than describing a single piece of data).

- The source: Data originates from a source, from where it is deposited, such as lab equipment, deposit environment, etc.

  - Personal Computer (PC): In many research disciplines the primary tool for storing, manipulating, and experimenting with data content is the personal computer. Deposits made from a personal computer usually take place over the network to which it is connected. Whilst data is often deposited by the person (or piece of equipment) that created it, sometimes data is deposited by a third party.

  - Laboratory Equipment (LE): Pieces of automated laboratory equipment are able to collect, create, and store data files. They often automatically collect and store metadata about the data. Some laboratory equipment creates discrete data files, whilst other equipment runs continuously, creating a stream of data.

  - Current Research Information System (CRIS): Used by research institutions to record the outputs of the research process. Typically this includes publications and activities, but may also involve data files.

  - Repository (R): Repositories are often seen as targets for deposit; however, they can also be sources, such as with bulk export to another system, multiple deposit, or temporary/staging uses.

  - Publishing System (P): Scholarly publishing outputs are typically submitted to publishers through submission systems. In addition to the document to be published, a growing number of journals encourage the publication of related data sets.

- The target: Data is deposited into a temporary or final target system, such as a repository.

  - Institutional Repository (IR): Researchers often start dealing with research data management at an institutional level. Since they often already have their local institutional repository available, a common procedure is to create specific collections for filing small data files into the repository.

  - Institutional Data Repository (IDR): An institutional data repository is often the next step up from an Institutional Repository for carrying out centralised institutional research data management. The availability of a specific institutional data repository allows for a more comprehensive approach to research data management.

  - Geographic (regional / national) Repository (GR): Data management systems are often based around natural groupings, one of which can be geographical.

  - Subject Repository (SR): One of the characteristic features of research data management is the large difference between research disciplines in the nature of datasets and the procedures for their management.

  - Publication-related Repository (PRR): Publication-related repositories are tied to specific publications. Being associated with publications and publishers, there is often an extra requirement for a close association between the research paper and the referenced dataset.

  - Type-specific System (TSS): A system built around one particular type of data. This is often done so that the solution can have an implicit understanding of the data in order to interact with it in some fashion which would not be possible with a generic or un-customised repository solution.

  - Unaffiliated Data Repository (UDR): There is also room in the research data management landscape for independent, unaffiliated data repositories aiming to collect the whole dataset output regardless of the institutional, disciplinary or national affiliations of its producers.

  - Staging System (SS): Not all data deposits are into final systems where they will be curated over the long term. Some deposits are made into staging systems that hold the data for a temporary period.

- Future interactions: Some data deposit use cases are ‘fire-and-forget’; other use cases cover data that is subject to change, updates, or additions over time.

  - Final (F): In about two thirds of the survey responses, the deposit of data into a repository was the final step for the data. Following this there was no intention of updating, augmenting, or deleting the data.

  - Updates (U): In the other one third of responses, there is a requirement for updates to be made to the deposited data. The updates may be in the form of new data being added, old data removed, or existing data updated.

Data deposit fingerprints

The notion of a data deposit fingerprint is the combination of data type, source, target, and future interaction description (the letters by each part above). When put together, the fingerprint describes what is being deposited, how the deposit takes place, and how the data may change in the future.
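As an illustration of how a fingerprint is assembled, the sketch below parses a string such as DC-PC-IDR-F into its four parts and checks each against the codes defined in the components list. The `Fingerprint` type and `parse_fingerprint` function are hypothetical helpers for this post; the "U/F" form is treated as a use case that may be either updated or final.

```python
from typing import NamedTuple

# Codes taken from the use case components listed above.
DATA_TYPES = {"DC", "DD", "DCD"}
SOURCES = {"PC", "LE", "CRIS", "R", "P"}
TARGETS = {"IR", "IDR", "GR", "SR", "PRR", "TSS", "UDR", "SS"}
INTERACTIONS = {"F", "U"}


class Fingerprint(NamedTuple):
    data_type: str
    source: str
    target: str
    interactions: tuple


def parse_fingerprint(text):
    """Split 'TYPE-SOURCE-TARGET-FUTURE' and validate each code."""
    parts = text.strip().split("-")
    if len(parts) != 4:
        raise ValueError(f"expected four parts, got {len(parts)}: {text!r}")
    data_type, source, target, future = parts
    interactions = tuple(future.split("/"))
    checks = [(data_type, DATA_TYPES), (source, SOURCES), (target, TARGETS)]
    checks += [(code, INTERACTIONS) for code in interactions]
    for code, allowed in checks:
        if code not in allowed:
            raise ValueError(f"unknown code {code!r} in {text!r}")
    return Fingerprint(data_type, source, target, interactions)


if __name__ == "__main__":
    print(parse_fingerprint("DC-PC-IDR-F"))
```

A use case described by two fingerprints joined with "+" can be handled by splitting on "+" first and parsing each part separately.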

Use case examples

A selection from the longer list of examples in the main paper:

- JISC RDM DataFlow Project (University of Oxford)

http://www.dataflow.ox.ac.uk/

DataFlow implements a two-stage data management infrastructure: DataStage allows researchers to work with, annotate, publish, and permanently store research data locally, while valuable datasets are preserved and published via the institutional DataBank platform.

Fingerprint: DC-PC-SS-U (DataStage) + DC-PC-IDR-F (DataBank)

- Edinburgh DataShare

http://datashare.is.ed.ac.uk/

Edinburgh DataShare is an online digital repository of multi-disciplinary research datasets produced at the University of Edinburgh. Researchers who have produced research data associated with an existing or forthcoming publication, or which has potential use for other researchers, are invited to upload their dataset for sharing and safekeeping. A persistent identifier and suggested citation will be provided.

Fingerprint: DC-PC-IDR-F

- LabArchives

http://www.labarchives.com/

LabArchives is a web-based commercial laboratory notebook that allows research groups to manage, securely store and publish their research data. LabArchives has recently signed a partnership with the open access publisher BioMed Central to make this platform the default storage system for supplementary data to be published with research papers submitted to BMC journals.

Fingerprint: DC-LE-TSS-U/F

- Dryad

http://datadryad.org/

Dryad is an international repository of data underlying peer-reviewed articles in the basic and applied biosciences.

Fingerprint: DC-P-PRR-F

- arXiv service for dataset storage as supplementary files

http://arxiv.org/help/datasets/

arXiv is primarily an archive and distribution service for research articles. arXiv provides support for data sets and other supplementary materials only in direct connection with submitted research articles, either storing them as ancillary files on arXiv or as linked datasets in the DataConservancy subject-based data repository.

Fingerprint: DC-PC-SR-F

- The Australian Repositories for Diffraction Images (TARDIS)

http://www.tardis.edu.au/

TARDIS/MyTARDIS is a data repository system for high-end instrumentation data capture from facilities such as the Australian Synchrotron. The program records the data generated by the experiment, catalogues it and transfers it back to the home institution (where the researcher can analyse the data).

Fingerprint(s): DC-LE-SR-U + DD-R-IDR-F

- figshare

http://figshare.org/

This unaffiliated platform allows researchers to store (and eventually to share and publish open access) all their research output, including figures, datasets, tables, videos or any other type of material. Emphasis is placed on its potential use for dissemination of unpublished and/or negative results.

Fingerprint: DC-PC-UDR-F

Requirements for data deposit

From the above use cases we can extract a broad set of requirements for deposit of research data and associated collateral:

1. Individual files will need to be transferred from the client to the server (DC)

2. Groups of multiple files will need to be transferred from the client to the server (DC)

3. Some clients will need to be able to stream files over a period of time; for example, when a piece of equipment is producing new measurements which need to be streamed to the server (DC)

4. The client/server pair must be able to support the deposit of arbitrarily large files (DC)

5. The client must be able to deliver only the content (without supporting metadata) to the server (DC)

6. The client must be able to deliver both content and metadata to the server (DC, DD)

7. The client must be able to deliver metadata only (without the data) to the server (DD)

8. The client must be able to deliver collection metadata to the server (DCD)

9. The client/server pair must be able to deal with network scalability issues, such as slow network speed or high latency, in order to adequately support large files. (DC, PC)

10. The deposit must be possible from a person who is the originator/custodian of the data (PC, CRIS)

11. The deposit must be possible from a non-human user, such as a piece of equipment, automated process or software intermediary (LE, CRIS)

12. The deposit must be possible from a person or entity who is doing the deposit on-behalf-of the originator/custodian of the data (PC, LE, CRIS)

13. Sometimes objects will need to be delivered to multiple destinations. Therefore the client must be able to deliver the same item to multiple locations. (R, P)

14. Sometimes one part of an object will need to be sent to one location, and another part to a different location (for example, data to one location, metadata to another). Therefore, the client must be able to deliver different parts to different places, also taking into account (13). (CRIS, P)

15. If different parts of the object go to different locations (e.g. data and metadata) as per (14), it should be possible for each location to know about the other(s). (CRIS, P)

16. Further to (15), it should be possible to update an aspect of the object (e.g. the dataset or the metadata) with information regarding a related part of the object or a related work (e.g. the dataset associated with some metadata, or the publication derived from the data). (CRIS, P)

17. Sometimes it will be necessary to bulk transfer data between systems, so the protocol should support bulk transfer and data migration. (R, IR, IDR, SS)

18. In some cases the deposit repository will be a staging environment for the data pending migration (as per (17)). The client should be able to discover when a server has migrated the data. (R)

19. It must be possible for the depositor to select an appropriate deposit target, both the repository itself and the collection within the repository (IR, IDR, GR, SR, PRR, TSS, UDR, SS)

Gaps in SWORD support

Most of the requirements can be met with SWORDv2 as it is, but some of them cannot. This section picks out requirements where support within SWORD is either lacking or insufficient, and suggests possible approaches.

3. Some clients will need to be able to stream files over a period of time; for example, when a piece of equipment is producing new measurements which need to be streamed to the server (DC)

We must consider the possibility that each new bit of content being streamed by the client is appended to an existing file on the server: this behaviour is currently unsupported in SWORD.

4. The client/server pair must be able to support the deposit of arbitrarily large files (DC)

Large files and deposit-by-reference situations may both require post-processing after the deposit has completed. In those cases the standard 201 (Created) response from the server might not be appropriate, and a 202 (Accepted) response would be better, along with enhancements to error handling for such deposits; neither is currently available in SWORD. Further, it is unclear whether deposit-by-reference payloads can be considered Packages in the SWORD sense, so some clarification is needed.
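From the client side, the difference between the two status codes might be handled along the following lines. This is a sketch only: handling of 202 (Accepted) is a proposal discussed in this post and not part of the current profile, and `classify_deposit_response` and its return labels are hypothetical names.

```python
def classify_deposit_response(status_code):
    """Classify the HTTP status returned for a create request.

    201 means the resource exists now; 202 (proposed, not in the current
    profile) would mean the server accepted the deposit but is still
    processing it, so the client should check back later.
    """
    if status_code == 201:
        return "created"
    if status_code == 202:
        return "accepted-pending"
    if 400 <= status_code < 600:
        return "error"
    return "unexpected"


if __name__ == "__main__":
    for code in (201, 202, 413):
        print(code, classify_deposit_response(code))
```

A client receiving the pending result would need some way to learn the final outcome, which is where the error handling enhancements mentioned above come in.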

8. The client must be able to deliver collection metadata to the server (DCD)

There are no options within SWORD (or Atom) to update the collection metadata.

10. The deposit must be possible from a person who is the originator/custodian of the data (PC, CRIS)

While it is clear in the SWORD specification that authentication and authorisation are covered (Section 8), it is worth noting that the profile says nothing about alternative authentication and authorisation mechanisms such as OAuth.

13. Sometimes objects will need to be delivered to multiple destinations. Therefore the client must be able to deliver the same item to multiple locations. (R, P)

and

14. Sometimes one part of an object will need to be sent to one location, and another part to a different location (for example, data to one location, metadata to another). Therefore, the client must be able to deliver different parts to different places, also taking into account (13). (CRIS, P)

Is there anything that SWORD can do to support the multiple-deposit-target requirement, such that it is easier for multi-depositing client environments to operate?

15. If different parts of the object go to different locations (e.g. data and metadata) as per (14), it should be possible for each location to know about the other(s). (CRIS, P)

and

16. Further to (15), it should be possible to update an aspect of the object (e.g. the dataset or the metadata) with information regarding a related part of the object or a related work (e.g. the dataset associated with some metadata, or the publication derived from the data). (CRIS, P)

What SWORD mainly lacks for this requirement is a clear mechanism by which items on servers know about other items which are either equivalents or closely related additional materials (e.g. the data and the associated metadata in two separate locations).

17. Sometimes it will be necessary to bulk transfer data between systems, so the protocol should support bulk transfer and data migration. (R, IR, IDR, SS)

Although SWORD doesn’t technically prevent bulk transfer, we should consider options which would make this process easier.

18. In some cases the deposit repository will be a staging environment for the data pending migration (as per (17)). The client should be able to discover when a server has migrated the data. (R).

Where data is moved after deposit, the client will need a way to discover the new Edit-IRI. The server can use a 303 (See Other) response, which the profile permits but does not explicitly cover. The profile also gives no other details about performing an HTTP GET on the Edit-IRI, which could clarify such situations.
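One way a client could discover a moved item is to follow 303 responses until it reaches an Edit-IRI that answers directly. The sketch below assumes a caller-supplied `fetch` function that performs the HTTP GET and returns a status code and a headers dictionary; `resolve_edit_iri`, `fetch` and the example.org addresses are all hypothetical and stand in for a real HTTP client and real servers.

```python
def resolve_edit_iri(edit_iri, fetch, max_hops=5):
    """Follow 303 (See Other) responses from GET on an Edit-IRI.

    `fetch(iri)` must return (status_code, headers_dict). The function
    returns the first IRI that does not answer with a 303.
    """
    current = edit_iri
    for _ in range(max_hops):
        status, headers = fetch(current)
        if status != 303:
            return current
        location = headers.get("Location")
        if not location:
            raise ValueError("303 response without a Location header")
        current = location
    raise RuntimeError("too many redirects while resolving Edit-IRI")


if __name__ == "__main__":
    # Canned responses standing in for a staging server and an archive.
    responses = {
        "http://staging.example.org/edit/1":
            (303, {"Location": "http://archive.example.org/edit/9"}),
        "http://archive.example.org/edit/9": (200, {}),
    }
    print(resolve_edit_iri("http://staging.example.org/edit/1", responses.get))
```

Capping the number of hops keeps a misconfigured pair of servers from sending the client round in circles.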

19. It must be possible for the depositor to select an appropriate deposit target, both the repository itself and the collection within the repository (IR, IDR, GR, SR, PRR, TSS, UDR, SS)

Although SWORD uses Service Documents so that clients can discover deposit targets within a repository, it does not include anything for discovery of repositories, and it is worth exploring whether there is anything that SWORD could do to improve the discovery options.

Recommended Actions

Proposed profile changes

- Add support for 202 (Accepted) response codes to appropriate deposit options, to allow for asynchronous processing of deposits such as large files or by-reference deposits. (see requirement 4)

- Add more detail on listing the contents of SWORD Collections (section 6.2 of the profile), as per the DepositMO extensions (see requirement 17)

- Add a new section to the profile explicitly covering GET on the Edit-IRI and supporting the use of 303 (See Other) (see requirement 18)

Community discussion points

- Handling of streaming content

- By-Reference deposit

- Error handling for asynchronous operations

- Addition of collection metadata

- Reference to equivalent versions and other object parts

- Bulk transfer

Possible further investigations

- SWORD has reached a point where discussion of further authentication and authorisation approaches – and in particular OAuth – may be worthwhile. (see requirement 10)

- Multiple-deposit-target use cases are becoming increasingly prevalent, and it would be worth exploring this requirement in some detail along with the existing services in this area to see if there is anything SWORD can do to improve the situation. (see requirements 13, 14)

- Further investigation is necessary on the “staging repository” requirement (18), to establish whether there is a genuine need for this functionality and, if so, whether there is anything additional the profile can do to support it.

- To aid discovery of SWORD compliant repositories and their service documents it would be valuable to work with services such as OA-RJ and the open access repository registries such as OpenDOAR and ROAR to find out if there is further work the profile can do to improve discoverability. (see requirement 19)

Originally published at: http://isc.ukoln.ac.uk/2012/03/20/launch-of-sword-version-2/

The second version of the SWORD resource deposit protocol, designed primarily to enable the deposit of scholarly works into content repositories, has now been released. Developed with funding from the JISC, the SWORD v2 project has built upon the successful and award-winning SWORD deposit protocol to now support the full deposit lifecycle of deposit, update, and deletion of resources. In addition to the new technical standard, implementations for some of the most well-known repository platforms have been created, along with client toolkits and exemplar demonstrators.

Led by Richard Jones (Technical Lead) of Cottage Labs and Stuart Lewis (Community Manager), formerly of the University of Auckland and now of the University of Edinburgh, along with oversight from Paul Walk from the UKOLN Innovation Support Centre, the project was steered by an international technical advisory group of 36 experts in the areas of repositories, standards development, and scholarly content.

The development of the standard will allow a new breed of smart deposit scenarios and software products that are able to deposit content into one or more repositories, track the deposits over time, interact with them, and update or remove them. Before SWORDv2, deposit interoperability took the form of single deposits, coined as ‘fire and forget’. Once a deposit had been made, no further interaction was possible. SWORD v2 extends its support for the Atom Publishing Protocol (AtomPub) by adding support for updates and deletions. These will enable new interoperable deposit management systems to be built that will work with any SWORD v2 compliant system.

Deposit use cases which are now possible include collaborative authoring, or getting more integrated with the publication workflow where multiple updates and versions of documents are required. Further details can be found at the SWORD website: https://sword.cottagelabs.com/ or by emailing info@swordapp.org

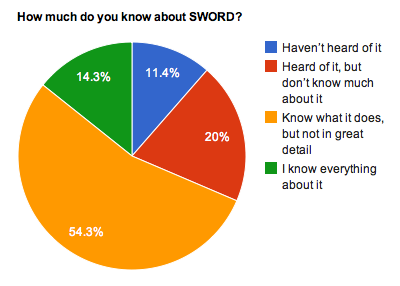

We recently posted a survey about ‘Data Deposit’. The survey is still open if you wish to respond.

The first question in the survey asks:

- “How much do you know about SWORD?”

The SWORD v2 project has been asked by the JISC to look into the applicability of the SWORD protocol for depositing Research Data. The SWORD protocol has always been agnostic about the type of resource it is depositing; however, its initial development stemmed from a requirement for the deposit of scholarly communications outputs into repositories, these typically being small text-based items.

In order to investigate how well SWORD and SWORD v2 would deal with Research Data, we need to know about the different types of research data that you are working with. This will allow us to discover some of the range of different data types in use, and the general and specific requirements of each.

We’ve tried to keep the survey short – it is only 9 questions long. If you have a few minutes to share some information with us about the data you work with, we would very much appreciate it.

Visit the survey at https://sword.cottagelabs.com/sword-v2/sword-v2-data-deposit-survey/

SWORD v2 ready to use!

As part of the SWORD v2 project funding, resources were allocated to implement it in a number of repository platforms. First off the blocks to release SWORD v2 implementations as part of their core functionality are EPrints and DSpace:

- Download: EPrints 3.3.6+

- Download: DSpace 1.8+